- Artificial intelligence (AI) is going to transform business and the economy, but what are its potential benefits and downsides?

- Everyone in business has a role to play in ensuring AI is truly a force for good.

- We need to approach innovation with the right motivations and accountability mechanisms to ensure AI’s promise becomes reality.

It’s hard to overstate the impact that artificial intelligence (AI) is going to have on every aspect of business and the economy. In the tech industry, we have long understood its potential. Much of the rest of the world woke up to its transformational power more recently, particularly following last year’s launch of ChatGPT.

Naturally, that awesome power has raised a slew of questions. What are AI’s potential benefits and downsides? Will the former outweigh the latter? What will AI do to productivity and jobs? Will it exacerbate inequality? Where is it going to take us? Will we be able to control AI, or will it control us? Each of these questions merits a healthy debate.

As a veteran of the tech industry and as an investor whose firm’s motto is “people first,” I bring an unabashedly optimistic perspective to the conversation. But optimism doesn’t mean complacency. Everyone in business – from the startups creating AI technologies, to the companies adopting machine learning algorithms to supercharge their products or operations – has a role to play in ensuring AI is truly a force for good.

Here’s why I’m optimistic, and what I think companies big and small should be doing today to ensure a positive outcome.

AI will augment humans, not replace them

Those of us who have been around the tech industry for years have seen it again and again – powerful emerging technologies raise fears about jobs. And certainly, new forms of automation eliminate some jobs. But they also create efficiencies and streamline repetitive tasks, allowing humans to move up the value chain.

Ultimately, more jobs are created. Personal computers, for example, eliminated some jobs for typesetters but helped to create far more employment through desktop publishing. I am convinced that AI will have that kind of effect, but on a much larger scale. It will create massive productivity gains that will allow businesses to invest more, innovate more and generate new jobs along the way.

But that’s just one part of the story. I also believe AI will give us new superpowers that will make our work more satisfying and our lives richer, leading us into an era I think of as “human squared.”

How? First, our way of interacting with technology will change. Going forward, our primary way to communicate with computers will be through rich and layered conversations. Perhaps more importantly, for the first time, technology will be able to perform cognitive tasks that augment our own capabilities.

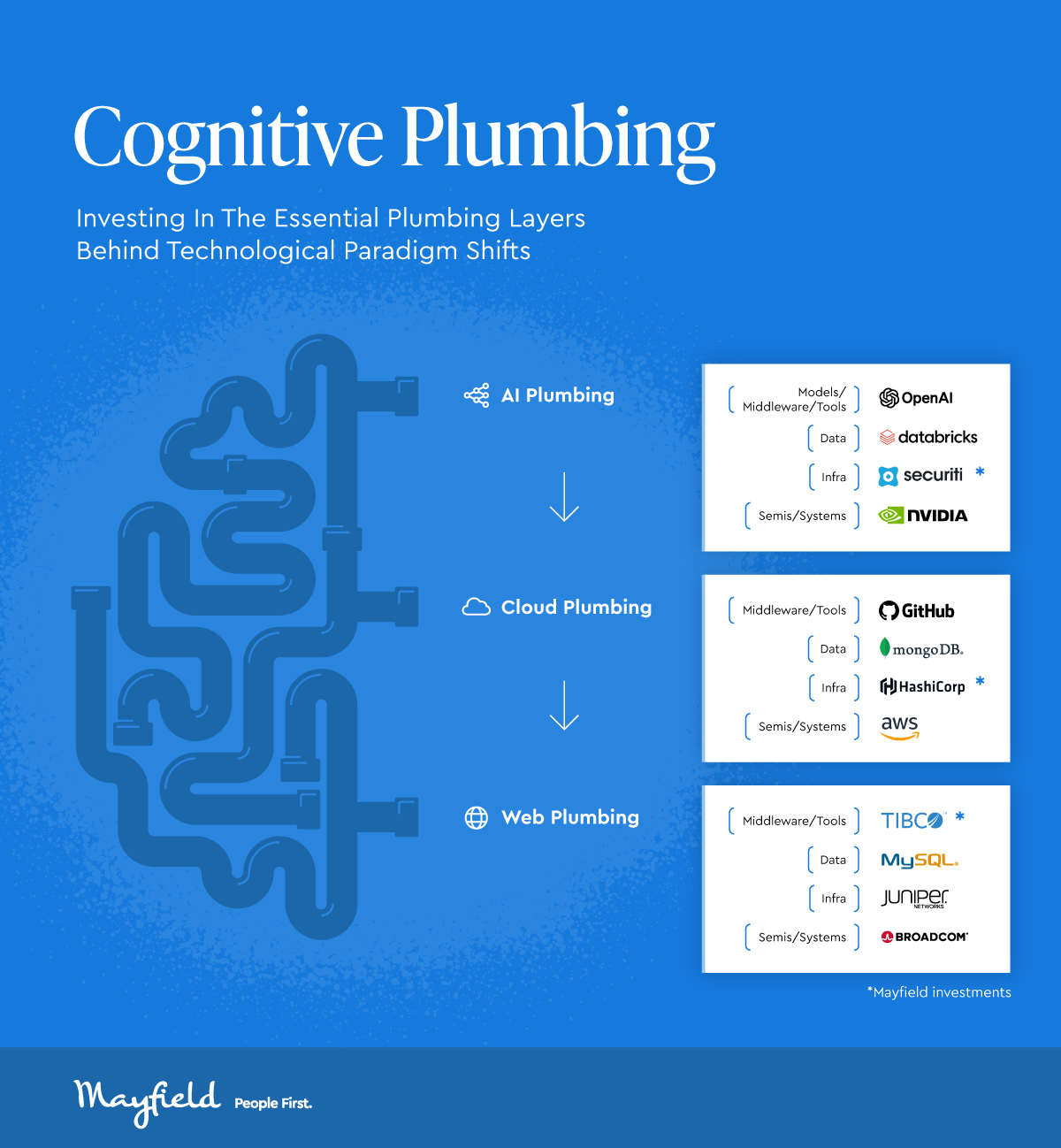

Rather than merely speed up and automate repetitive tasks, AI will generate net new things much like humans do. The result is that we’ll be able to multiply our own capabilities with a human-like assistant. Whether you call it an intelligence agent (AI becomes IA), copilot, teammate, coach, genie or something else, it will make us immensely more capable, regardless of the task we are performing. I believe that we are witnessing a whole new layer of cognitive plumbing that is being built to drive this wave.

Founders should build trustworthy companies from day one

The entrepreneurs creating tomorrow’s AI systems and applications all face choices in how they develop and harness the technology. That’s why, as an investor in startups, I encourage all founders to build trustworthy companies from day one.

What do I mean by that? One of the first things I do when I meet founders is to probe their values. I want to know whether they are driven by a human-centric mission, and whether their vision for how to deploy technology is aligned with ours.

These are probing and broad-ranging conversations that inevitably cover a few key topics:

- Trust and safety can never be an afterthought. In the past year, the potential pitfalls of AI – whether hallucinations, lack of transparency, inequity, bias, deepfakes, copyright infringement or other issues – have been well publicized. If AI is going to be a force for good, founders must not only be aware of these pitfalls but also determined to address them. They must evaluate the trustworthiness of the models they develop and use, and ensure that they are compliant with a nascent but rapidly growing regulatory regime.

- Data privacy is a human right. AI is fuelled by data and companies should treat that data responsibly. That means not only complying with regulatory regimes, but also embracing ethical practices around its use. Those practices range from transparency about what companies will and won’t do with data, to the handling, classification and security of sensitive data, to the careful tracking of inventory, lineage, retention and consent for everything that goes into AI models. The time to put guardrails around data practices is now, not after breaches or privacy violations are exposed and it’s too late to prevent harm.

Startups should not only dedicate themselves to AI safety, accountability and averting harms, but also state their commitments publicly. Anthropic, for example, has done so, and its approach should serve as a model for others.

Responsible AI is a practice, not a checklist

Just like the companies creating AI technologies, those that are deploying them have choices to make and, I would argue, a duty to deploy them responsibly. I’m encouraged by what I’m seeing and hearing.

Whether it’s through our annual survey of CIOs (chief information officers) or in conversations across the industry and at events like the World Economic Forum’s Annual Meeting in Davos, I have noticed a sense of collective urgency among many business leaders to do the right thing.

But that’s easier said than done. Implementing responsible AI practices across an organization is a challenge that requires resources, commitment and leadership. Like anything in business, it must begin with an adequate budget and a mechanism for accountability.

The person or group that oversees it needs to have the visibility and stature within the organization to be able to convene stakeholders – across tech, legal, compliance, audit, and other functions – and influence decisions. Importantly, the CEO and board should know how those groups are working together and the roles each has to play.

Some companies are waiting for regulatory regimes to force their hand. I think that’s a mistake. Deploying AI responsibly, and doing it in a way that doesn’t slow the pace of innovation, is not like flipping a switch.

Those who aren’t laying out thoughtful plans today are already behind. For those who don’t know where to start, a growing number of certification programmes from organizations like the Responsible AI Institute can help lead the way. The time to start is now.

AI is already improving our economy and wellbeing in myriad ways: higher worker productivity, more accurate health diagnoses, new forms of drug discovery and better decision-making, to name a few. But that’s just the beginning.

I am certain that businesses and entrepreneurs will be able to harness AI technology in new ways that will unlock untapped human potential and benefits on an unprecedented scale. We just need to approach innovation with the right motivations and accountability mechanisms to ensure that promise becomes reality.

This column was originally published on the World Economic Forum website.