No matter where I look, everyone is talking about artificial intelligence. In the world that EE Times addresses, we often talk about the tech innovations, the breakthroughs, the chips, the software and the tools that allow system developers and manufacturers to integrate AI technology. But we don’t talk enough about the risks and the dangers of AI.

There are clearly dangers.

We don’t know the whole story behind the OpenAI boardroom battle last month, but some seem to think it was due to substandard safety guardrails and frighteningly rapid tech development. And then there was the incident in October of the Cruise robotaxi that didn’t detect a pedestrian and only came to a stop on top of the person.

There’s also potentially an ugly side to AI, as highlighted in a paper to be presented at next week’s Conference on Neural Information Processing Systems (NeurIPS 2023) in New Orleans. It suggests that AI networks and tools are more vulnerable than previously thought to targeted attacks that effectively force AI systems to make bad decisions.

Researchers from North Carolina State University’s Department of Electrical and Computer Engineering developed a piece of software called QuadAttacK to test the vulnerability of deep neural networks to adversarial attacks. The software determines how data can be manipulated to fool an AI network and then observes how the AI responds. Once it identifies a vulnerability, it can quickly make the AI see whatever QuadAttacK wants it to see.
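For readers who want a feel for what “manipulating data to fool an AI” means in practice, here is a minimal sketch. It is not the QuadAttacK algorithm, which the paper describes in detail. It is a much simpler gradient-sign perturbation applied to a toy linear classifier, and the weights, input and `epsilon` budget are all made-up values chosen for illustration.

```python
# Toy illustration only: a hand-written linear classifier, not a deep
# neural network and not the QuadAttacK method. All numbers are hypothetical.

def score(weights, bias, x):
    """Linear score; a positive value means the model predicts class 1."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def predict(weights, bias, x):
    """Return the predicted class label (1 or 0)."""
    return 1 if score(weights, bias, x) > 0 else 0

def sign(v):
    """Return -1, 0 or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def sign_gradient_attack(weights, bias, x, epsilon):
    """Shift each feature by at most epsilon toward the opposite class.

    For a linear model, the gradient of the score with respect to the
    input is simply the weight vector, so its sign gives the direction.
    """
    direction = -1 if predict(weights, bias, x) == 1 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]

weights = [0.9, -0.4, 0.3]   # hypothetical model weights
bias = -0.1                  # hypothetical bias
x = [0.5, 0.2, 0.1]          # hypothetical clean input

x_adv = sign_gradient_attack(weights, bias, x, epsilon=0.25)

print("clean prediction:      ", predict(weights, bias, x))
print("adversarial prediction:", predict(weights, bias, x_adv))
```

In this toy case, a small bounded change to every feature is enough to flip the model’s decision. Attacks on real deep networks are considerably more sophisticated, but they exploit the same basic idea.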

The implication of this is explained by Tianfu Wu, co-author of a paper on the new work and an associate professor at the university. “If the AI has a vulnerability, and an attacker knows the vulnerability, the attacker could take advantage of the vulnerability and cause an accident,” he said. “This is incredibly important, because if an AI system is not robust against these sorts of attacks, you don’t want to put the system into practical use—particularly for applications that can affect human lives.”

This is of particular concern when you think about recent talk about the use of autonomous weapons systems. Just imagine what would happen if someone clever understood the vulnerabilities in the system. For those of us of a certain age, it brings back memories of cartoons in which the megalomaniac bad actors wanted to “take over the world.”

There is no doubt that the risks are real. But we could use more debate about the bad and the ugly side of AI—especially when AI is here, there and everywhere, both now and in the future.

To address this aspect of the debate, EE Times and Silicon Catalyst partnered to stage a webinar with panelists addressing social responsibility, regulatory and investor perspectives on the less-spoken side of AI.

On the panel, which I moderated, we unleashed Jama Adams, COO of Responsible Innovation Labs (RIL); Navin Chaddha, managing partner at Mayfield; and Rohit Israni, chair of AI standards for the U.S. (INCITS/ANSI), to explore the good, the bad and the ugly of the AI wonderland through the lenses of social impact, investment strategies and regulatory oversight.

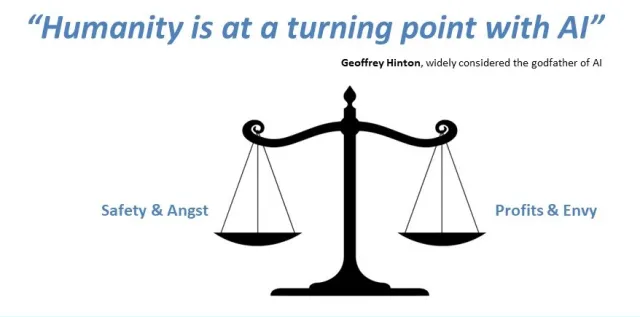

There are clearly tensions between profits and safety. As Geoffrey Hinton, whom some call “the godfather of AI,” said, “Humanity is at a turning point with AI.”

The one-hour webinar delves into the “bigger picture” aspects of how AI will shape our lives. The debate explores the balancing act between:

The EE Times–Silicon Catalyst webinar will help you get a sense of the rapidly evolving AI landscape and understand the current state of the industry, including how regulatory oversight and investment decisions need to take into account the impact of AI on humanity.

The webinar is particularly timely, as RIL launched the industry-driven voluntary Responsible AI Commitments and Protocol for startups and investors—to which more than 50 VCs have signed up. As part of the launch, RIL hosted a roundtable with VCs and U.S. Secretary of Commerce Gina Raimondo. Raimondo said that we are still in the early stages of the AI era, and there’s a need to “hold ourselves to account, to make sure that the way AI is developing is safe, trustworthy, secure, non-discriminatory, ethical and humane.”

One of the more than 50 VCs signed up to this protocol is Mayfield, and in the webinar, Chaddha explains both the firm’s investment strategies for AI startups and how the Responsible AI Commitments initiative impacts its thinking.

The complete webinar, “Navigating Our AI Wonderland: the Good, the Bad and the Ugly,” is available to view and replay.

The webinar follows recently staged events around AI by both EE Times and Silicon Catalyst: “AI Everywhere,” delivered via a series of web sessions, and “Welcome to Our AI Wonderland,” the 6th Annual Semiconductor Industry Forum.

The key point of the whole societal responsibility debate is that the industry needs to take into account the dangers that AI can pose.

In his closing remarks during the webinar on the good, the bad and the ugly of AI, Mayfield’s Chaddha said, “I think it’s a great time to start a company and do it in a balanced way.

“Do well financially, but also do good for the world.”

Nitin Dahad is Editor in Chief of embedded.com, as well as a correspondent for EE Times. An electronic engineering graduate from City University, he’s been an engineer, journalist and entrepreneur. He was part of ARC International’s startup team and took it public, and he co-founded a publication called The Chilli in the early 2000s. Nitin has also worked with National Semiconductor, GEC Plessey Semiconductors, Dialog Semiconductor, Marconi Instruments, Coresonic, Center for Integrated Photonics, IDENT Technology and Jennic. Nitin also held a role with government promoting U.K. technology globally in the U.S., Brazil, Middle East and Africa, and India.